BabyLM Challenge Award at EMNLP 2025 Workshop!

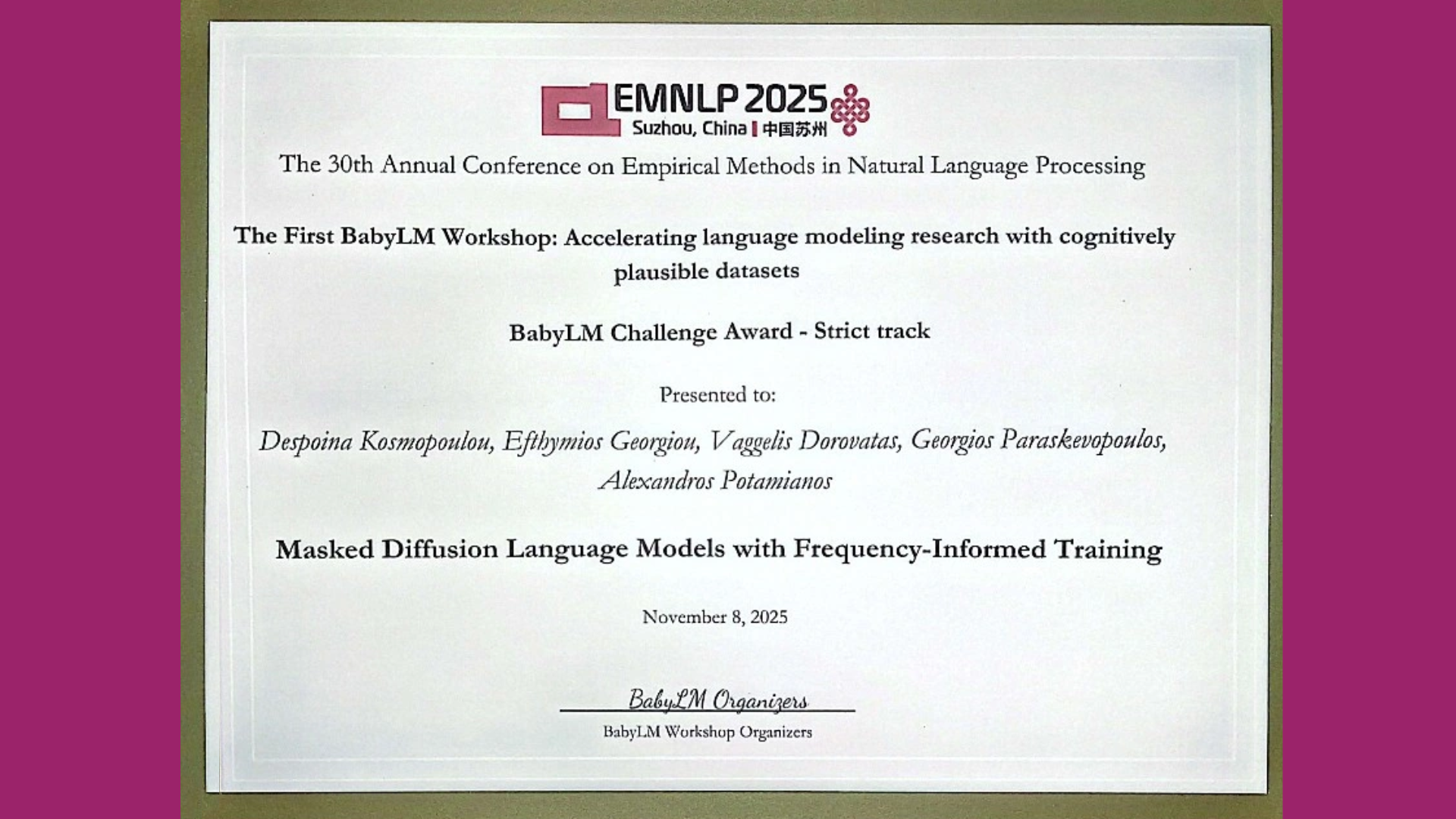

Researchers Despoina Kosmopoulou, Efthymios Georgiou, Vaggelis Dorovatas, Georgios Paraskevopoulos, and Alexandros Potamianos received the BabyLM Challenge Award (Strict track) for NLP tasks at "The First BabyLM Workshop: Accelerating Language Modeling Research with Cognitively Plausible Datasets". The workshop took place in Suzhou, China, during the 30th Annual Conference on Empirical Methods in Natural Language Processing (EMNLP 2025). The authors are affiliated with Archimedes, Athena Research Center, Greece; the National Technical University of Athens, Greece; the University of Bern, Switzerland; and the Institute of Language and Signal Processing (ILSP) of the Athena Research Center, Greece.

Details about this EMNLP 2025 workshop paper can be found below:

Masked Diffusion Language Models with Frequency-Informed Training

Despoina Kosmopoulou, Efthymios Georgiou, Vaggelis Dorovatas, Georgios Paraskevopoulos, and Alexandros Potamianos

ACL Anthology web link: https://lnkd.in/dwdxgTPZ

Abstract:

We present a masked diffusion language modeling framework for data-efficient training for the BabyLM 2025 Challenge. Our approach applies diffusion training objectives to language modeling under strict data constraints, incorporating frequency-informed masking that prioritizes learning from rare tokens while maintaining theoretical validity. We explore multiple noise scheduling strategies, including two-mode approaches, and investigate different noise weighting schemes within the Negative Evidence Lower Bound (NELBO) objective. We evaluate our method on the BabyLM benchmark suite, measuring linguistic competence, world knowledge, and human-likeness. Results show performance competitive with state-of-the-art hybrid autoregressive-masked baselines, demonstrating that diffusion-based training offers a viable alternative for data-restricted language learning.
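
To give a rough feel for what "frequency-informed masking" could look like, here is a minimal Python sketch. It is not the authors' implementation: the function names, the `power` exponent, the `[MASK]` string, and the mean-1 rescaling of the rarity weights are all assumptions made for illustration. The idea shown is that a masking level `t` (as sampled in masked diffusion training) sets the overall masking rate, while rarer tokens receive a proportionally higher chance of being masked.

```python
import random
from collections import Counter

MASK = "[MASK]"  # hypothetical mask symbol, chosen for this sketch


def rarity_weights(tokens, counts, power=0.5):
    """Per-token weights that grow as corpus frequency falls, rescaled to mean 1.

    `power` is an assumed softening exponent, not a value from the paper.
    """
    raw = [(1.0 / counts[tok]) ** power for tok in tokens]
    mean = sum(raw) / len(raw)
    return [r / mean for r in raw]


def frequency_informed_mask(tokens, counts, t, rng, power=0.5):
    """Mask tokens at an overall rate of roughly t, favouring rarer tokens.

    Returns the masked sequence and the per-token masking probabilities.
    Probabilities are clipped at 1.0, so the average rate can fall slightly
    below t when t is large.
    """
    weights = rarity_weights(tokens, counts, power)
    probs = [min(1.0, t * w) for w in weights]
    masked = [MASK if rng.random() < p else tok for tok, p in zip(tokens, probs)]
    return masked, probs


# Toy usage: "the" is frequent in the corpus, so it is masked less often.
corpus = "the cat sat on the mat the end".split()
counts = Counter(corpus)
rng = random.Random(0)
t = rng.random()  # masking level, sampled uniformly as in a simple noise schedule
masked, probs = frequency_informed_mask(["the", "cat", "sat"], counts, t, rng)
print(masked, [round(p, 3) for p in probs])
```

Rescaling the weights to mean 1 is one simple way to keep the expected fraction of masked tokens close to `t`; the paper itself should be consulted for how the authors reconcile frequency weighting with the NELBO objective.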

BabyLM Challenge & Workshop schedule: https://babylm.github.io/Workshop_times.html